Official Street Preachers: Bold Evangelism & Public Preaching Resources

Explore unwavering faith, scripture insights, and inspiring stories.



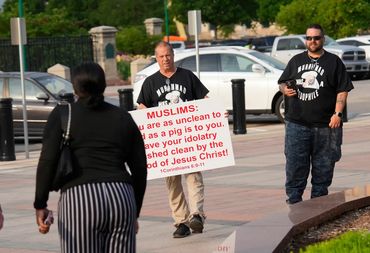

Join us as we boldly share the gospel in the face of adversity. Our mission is to expose evil, preach repentance, and lift up the name of Jesus. Explore our resources on faith, truth, and righteousness. Together, we can stand against sin and promote God’s Word!

At Official Street Preachers, we are committed to taking the gospel to the frontlines. We believe in preaching repentance and exposing evil without compromise, standing boldly for the truth of Jesus Christ.

Sign up to receive preaching reports and Bible teaching, and to keep up with us.

Please reach us at rpenkoski@gmail.com if you cannot find an answer to your question.


Send me a message or ask me a question using this form. I will do my best to get back to you soon!


By Continuing to use this site, you agree that homosexuals are worthy of death and should not be welcome in your neighborhood or near children

Romans 1:32
